Google Chrome is testing new AI-powered features aimed at improving user safety while browsing the web. The tools are designed to help users assess the credibility of websites and protect them from scams.

The first feature being tested is called "Store reviews." It uses AI to generate summaries of reviews from trusted independent platforms such as Trustpilot and ScamAdviser.

These summaries appear in the page info bubble, which is accessible by clicking on the lock icon or the "i" icon next to the website's URL in the address bar. The feature allows users to quickly check the reputation of online stores without having to visit multiple review sites.

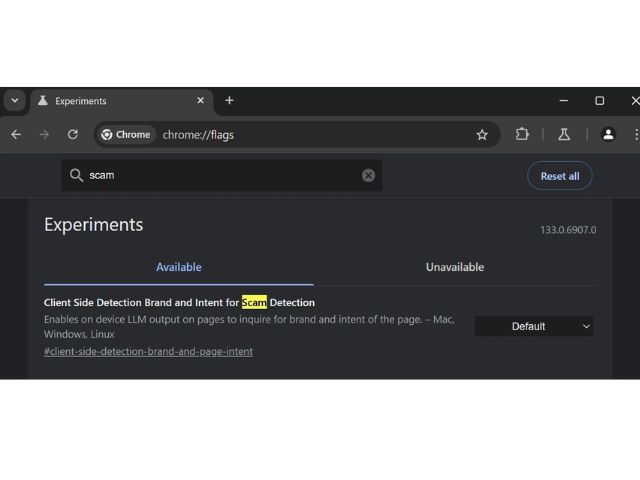

In addition to the store review tool, Google is also testing another AI-powered feature to detect scams. Known as the "Client Side Detection Brand and Intent for Scam Detection," this tool uses a large language model (LLM) to analyse web pages and determine their intent.

By scanning content, the tool assesses whether a page aligns with its supposed brand or purpose, helping to identify potential scams. Importantly, the analysis is done on-device, meaning user data remains private and is not uploaded to the cloud.
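To make the idea of a brand check concrete, here is a minimal sketch in Python. It is not Chrome's implementation: Chrome reportedly uses an on-device LLM, while this stand-in uses a simple rule that compares the brand a page claims to represent with the host it is served from. The `KNOWN_BRAND_HOSTS` table and the function names are hypothetical and exist only for illustration.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: brand name -> domains that brand is assumed to use.
# A real system would not rely on a hand-written table like this.
KNOWN_BRAND_HOSTS = {
    "paypal": {"paypal.com"},
    "microsoft": {"microsoft.com", "live.com"},
}


def host_matches(host: str, allowed: set[str]) -> bool:
    """Return True if host is an allowed domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in allowed)


def brand_mismatch(url: str, claimed_brand: str) -> bool:
    """Flag a page that claims a known brand but is served from an unrelated host."""
    allowed = KNOWN_BRAND_HOSTS.get(claimed_brand.lower())
    if allowed is None:
        # Unknown brand: this sketch makes no judgement either way.
        return False
    host = urlsplit(url).hostname or ""
    return not host_matches(host, allowed)


if __name__ == "__main__":
    # A genuine host passes; a lookalike host that merely embeds the brand is flagged.
    print(brand_mismatch("https://www.paypal.com/signin", "PayPal"))
    print(brand_mismatch("https://paypal.com.account-verify.example/login", "PayPal"))
```

Everything in the sketch runs locally and sends nothing over the network, which mirrors the on-device property described above. A language model would instead infer the claimed brand and the page's intent from the content itself rather than from a fixed table.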

The scam detection feature is currently available in Chrome Canary, an experimental version of the browser. Users need to enable it manually from the flags menu (chrome://flags), where experimental features are typically found.

Photo: BleepingComputer

These features are part of a broader trend in the tech industry toward AI-driven security measures. Microsoft has introduced a comparable tool in its Edge browser, the "scareware blocker," which uses AI to identify tech support scams.

By leveraging AI to enhance both trust and security, Google aims to reinforce Chrome's position as the dominant web browser while addressing the growing threats posed by online scams and malicious actors.

As AI tools become increasingly central to web browsing, more features like these are likely to follow to protect users from evolving risks.